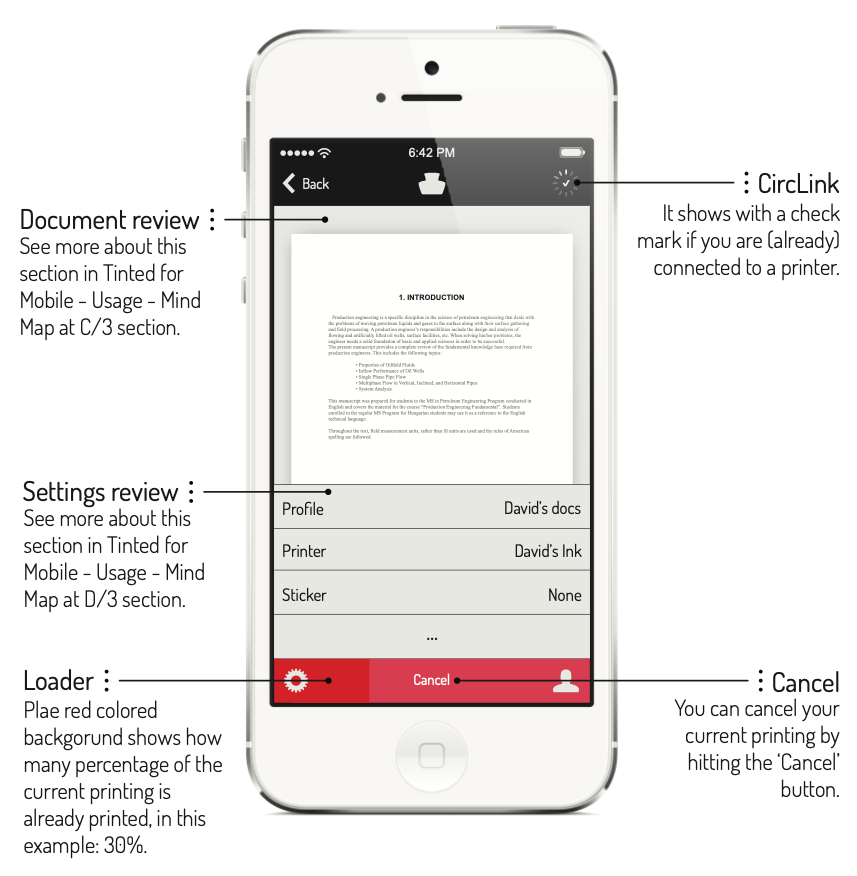

Keyboard Interactive bar-chart, Titled: Snowfall in Mammoth Mountain in 2023. This section contains additional information about this chart. To access the keyboard instructions menu, press TAB. To navigate to the chart area, press TAB again. To access the data table, use your screen reader go to table shortcut key.

Chart title: Snowfall in Mammoth Mountain in 2023

Executive Summary

Amount of new snow fell in the 2022/2023 in Mammoth Mountain, California.

Long Description

Amount of new snow fell in the 2022/2023 in Mammoth Mountain, California.

Structure

Statistical Information

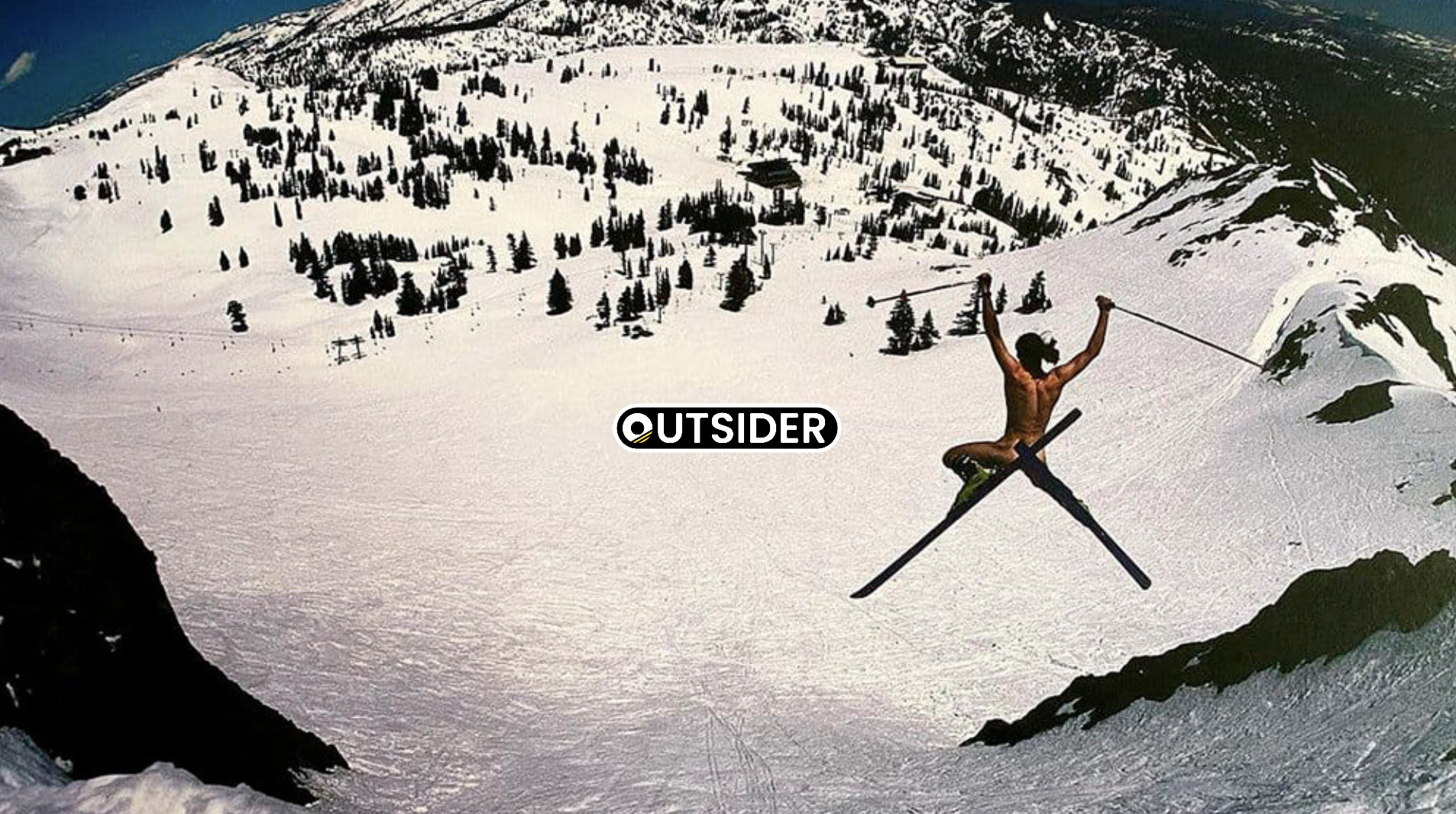

Snowfall was way above average in the 2022/2023 winter season in the Sierra Nevada mountains.

Chart Layout Description

Bar-chart. bar: 4.

The chart has a horizontal X axis. Start value Nov, end value Feb.

The chart has a primary vertical Y axis. Start value 0, end value 180m.

Notes about the chart structure

horizontal bars

Number of reference lines: 0. Number of annotations: 0.

Display Keyboard Instructions

In our multi-screen world, users constantly switch between devices: smartwatch, phone, tablet, laptop, desktop. Current interface approaches have critical limitations. Traditional GUIs require a separate design for each device, which increases development time and fragments the user experience. Existing Zoomable User Interfaces (ZUIs) use single-scale magnification, which fails on screens whose aspect ratio differs from the content's. Users end up acting as bridges between devices, which increases cognitive load and context-switching overhead.

When a landscape photo is magnified with standard, single-scale zoom to fit a portrait phone screen, empty space dominates the display: a single scale factor cannot adapt to mismatched aspect ratios. This fundamental constraint motivates a new approach.
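To make the aspect-ratio problem concrete, the sketch below computes how much of a viewport a uniformly scaled object actually covers. It assumes that "standard magnification" means the usual fit-inside rule (one scale factor, the smaller of the two axis ratios); the function name `uniformFit` and the example sizes (a 1600 x 900 landscape photo on a 390 x 844 portrait viewport) are illustrative assumptions, not values from FZUI.

```typescript
// Fit-inside ("contain") scaling: ONE scale factor shared by both axes.
// Names and example sizes are illustrative assumptions.
interface Size {
  width: number;
  height: number;
}

interface FitResult {
  scale: number;    // the single scale factor applied to both axes
  coverage: number; // fraction of the viewport area covered by the object (0..1)
}

function uniformFit(object: Size, viewport: Size): FitResult {
  // The smaller ratio wins so the object never overflows either axis.
  const scale = Math.min(viewport.width / object.width, viewport.height / object.height);
  const shownArea = object.width * scale * (object.height * scale);
  const coverage = shownArea / (viewport.width * viewport.height);
  return { scale, coverage };
}

// A landscape photo on a portrait phone viewport.
const result = uniformFit({ width: 1600, height: 900 }, { width: 390, height: 844 });
console.log(result.scale, result.coverage);
```

With these numbers the width ratio (390 / 1600) is the binding constraint, so the photo spans the full screen width but is only about 219 px tall: it covers roughly 26% of the screen area, leaving about three quarters of the display empty.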

FZUI introduces the Adaptive Magnification and Positioning Algorithm, the first zoom system to use separate scale factors for the horizontal and vertical dimensions. This allows objects to fill the available screen space efficiently at any aspect ratio, preserve spatial relationships and context, and adapt automatically without developer intervention. The horizontal scale is (viewport width - horizontal margins) / object width, and the vertical scale is (viewport height - vertical margins) / object height. Objects therefore scale non-proportionally to match the viewport, while smooth 60 FPS animations preserve spatial awareness.
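The two formulas can be turned into a small sketch. It is not FZUI's implementation: the names `fluidZoom`, `ZoomTransform`, and `Margins`, the symmetric-margin positioning rule, and the cubic easing curve are all assumptions. It computes the independent horizontal and vertical scale factors, a translation that places the object inside the margins, and an eased interpolation that an animation loop could sample each frame.

```typescript
// Hypothetical sketch of dual-scale magnification. The names and the
// symmetric-margin positioning rule are assumptions, not FZUI's code.
interface WorldRect {
  x: number;      // object position in world coordinates
  y: number;
  width: number;  // object size in world units
  height: number;
}

interface Margins {
  horizontal: number; // total horizontal margin (left + right), in px
  vertical: number;   // total vertical margin (top + bottom), in px
}

// Maps a world point to the screen: screen = world * scale + translate.
interface ZoomTransform {
  scaleX: number;
  scaleY: number;
  translateX: number;
  translateY: number;
}

function fluidZoom(object: WorldRect, viewportWidth: number, viewportHeight: number, margins: Margins): ZoomTransform {
  // Independent scale factors, one per axis.
  const scaleX = (viewportWidth - margins.horizontal) / object.width;
  const scaleY = (viewportHeight - margins.vertical) / object.height;
  // Place the object's top-left corner at half the margin on each axis,
  // which leaves equal margins on both sides.
  const translateX = margins.horizontal / 2 - object.x * scaleX;
  const translateY = margins.vertical / 2 - object.y * scaleY;
  return { scaleX, scaleY, translateX, translateY };
}

// Cubic ease-in-out; the source does not specify FZUI's easing curve.
function easeInOutCubic(t: number): number {
  return t < 0.5 ? 4 * t * t * t : 1 - Math.pow(-2 * t + 2, 3) / 2;
}

// Interpolates between two transforms for animation progress t in [0, 1].
function interpolate(from: ZoomTransform, to: ZoomTransform, t: number): ZoomTransform {
  const k = easeInOutCubic(Math.min(Math.max(t, 0), 1));
  const lerp = (a: number, b: number): number => a + (b - a) * k;
  return {
    scaleX: lerp(from.scaleX, to.scaleX),
    scaleY: lerp(from.scaleY, to.scaleY),
    translateX: lerp(from.translateX, to.translateX),
    translateY: lerp(from.translateY, to.translateY),
  };
}

// CSS matrix(a, b, c, d, e, f) maps (x, y) to (a*x + c*y + e, b*x + d*y + f),
// so this string reproduces the transform when transform-origin is "0 0".
function toCssMatrix(t: ZoomTransform): string {
  return `matrix(${t.scaleX}, 0, 0, ${t.scaleY}, ${t.translateX}, ${t.translateY})`;
}

// Example: a 400 x 300 card zoomed into a 390 x 844 portrait viewport.
const target = fluidZoom({ x: 200, y: 100, width: 400, height: 300 }, 390, 844, { horizontal: 30, vertical: 60 });
const identity: ZoomTransform = { scaleX: 1, scaleY: 1, translateX: 0, translateY: 0 };
console.log(toCssMatrix(interpolate(identity, target, 0.5)));
```

Here the card is scaled by 0.9 horizontally but by about 2.61 vertically, so it spans the viewport from 15 px to 375 px across and from 30 px to 814 px down. In a browser, a `requestAnimationFrame` callback could compute `interpolate(current, target, elapsed / duration)` each frame and write `toCssMatrix(...)` to the element's `style.transform`.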

The system is built on four design principles. First, content adjusts automatically to screen size and aspect ratio, with no media queries or responsive breakpoints. Second, information is organized spatially with meaningful zoom levels, so users can see both the forest and the trees without losing context. Third, the same interaction model works identically on every device: click or tap to select and zoom, pan to navigate horizontally, and swipe for quick traversal. Fourth, objects keep their positions during zoom, helping users build mental maps through physics-inspired motion rather than abstract metaphors.
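One way a single interaction model can serve every device is to classify each completed pointer interaction the same way regardless of input type; browser Pointer Events already report mouse, touch, and pen input through the same `pointerdown` and `pointerup` events. The sketch below is a hypothetical classifier, not FZUI's code: the names `classifyGesture` and `PointerTrace` and both thresholds are illustrative assumptions.

```typescript
// Hypothetical gesture classifier for one device-independent interaction model.
// Thresholds are illustrative assumptions, not FZUI's values.
type Gesture =
  | { kind: "select" }                    // click or tap: select and zoom
  | { kind: "pan"; dx: number }           // slow drag: navigate horizontally
  | { kind: "swipe"; direction: -1 | 1 }; // fast flick: quick traversal

interface PointerTrace {
  startX: number;
  startY: number;
  endX: number;
  endY: number;
  durationMs: number;
}

const TAP_MAX_DISTANCE_PX = 10;
const SWIPE_MIN_VELOCITY_PX_PER_MS = 0.5;

function classifyGesture(trace: PointerTrace): Gesture {
  const dx = trace.endX - trace.startX;
  const dy = trace.endY - trace.startY;
  // Barely moved: treat as a click or tap.
  if (Math.hypot(dx, dy) <= TAP_MAX_DISTANCE_PX) {
    return { kind: "select" };
  }
  // Horizontal speed separates a quick swipe from a deliberate pan.
  const velocity = Math.abs(dx) / Math.max(trace.durationMs, 1);
  if (velocity >= SWIPE_MIN_VELOCITY_PX_PER_MS) {
    const direction: -1 | 1 = dx < 0 ? -1 : 1;
    return { kind: "swipe", direction };
  }
  return { kind: "pan", dx };
}
```

A `pointerdown` handler would record the start position and time, and the matching `pointerup` handler would build a `PointerTrace` and dispatch on the returned `kind`; the same code path runs for a mouse on a desktop and a finger on a phone.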

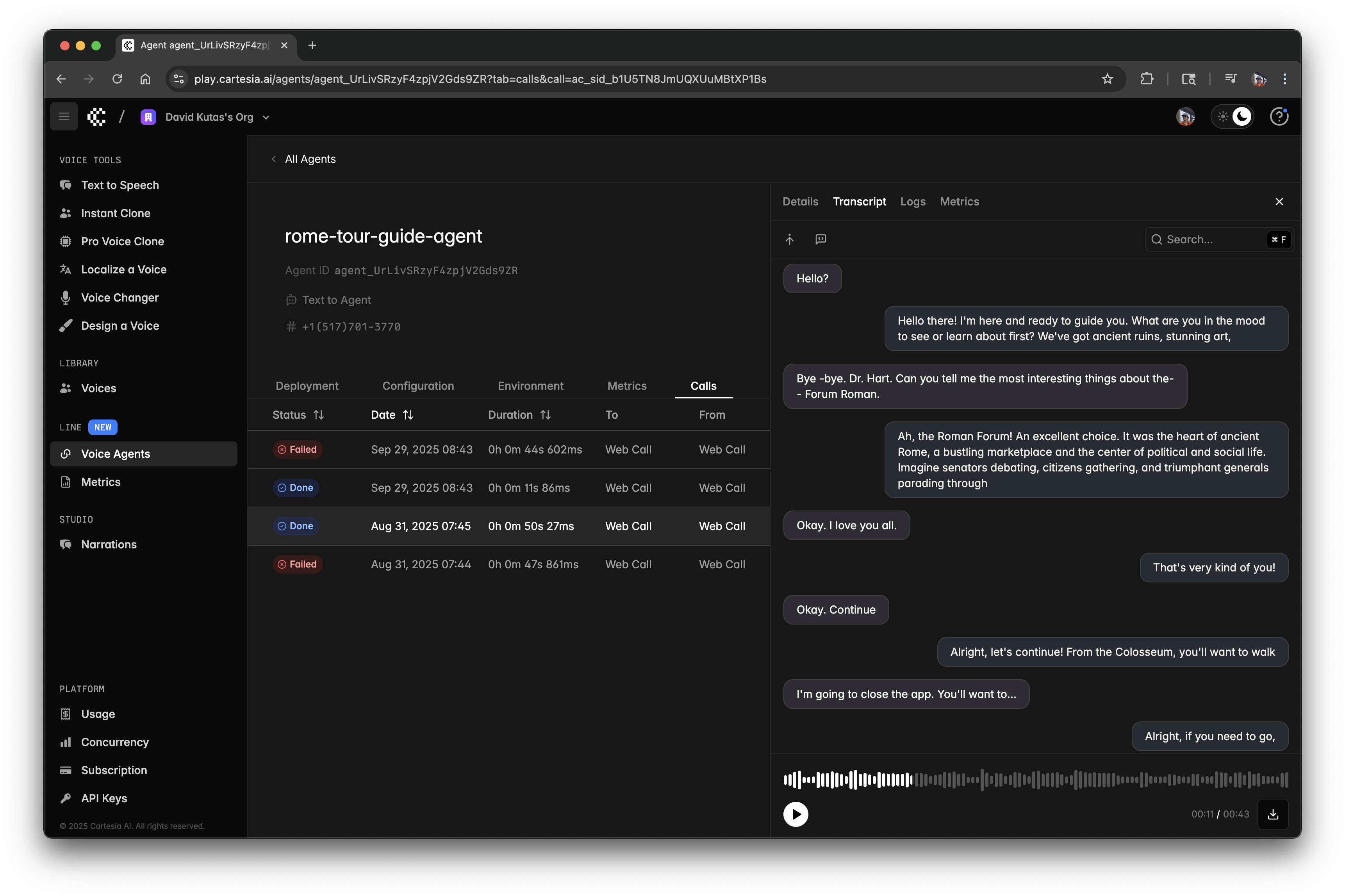

Linecept, a temporal project management tool, demonstrates FZUI in production. Its timeline-based coordination, inspired by the Gantt charts used during construction of the Hoover Dam, is simple enough for diverse teams yet powerful enough for complex projects. The tool has two zoom levels: overview mode shows all timelines, while zoom mode reveals detailed cards and artifacts. Eight time resolutions span from seconds to centuries. Users navigate through time and space with smooth animations and get identical functionality on mobile and desktop. The implementation achieves 60 FPS animation across devices, makes efficient use of screen real estate on displays from 4" to 27", and uses binary tree data retrieval for seamless lazy loading.
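The text says only that Linecept uses binary tree data retrieval for lazy loading; it does not say which kind of tree or what the key is. As an assumption, the sketch below indexes cards in a plain binary search tree keyed by timestamp and answers the question lazy loading needs: which cards fall inside the visible time window? The names `Card`, `TreeNode`, and `TimelineIndex` are hypothetical, and the tree is unbalanced for brevity; a production version would likely use a self-balancing variant.

```typescript
// Hypothetical timeline index: a binary search tree keyed by timestamp.
// Unbalanced for brevity; equal timestamps are stored in the right subtree.
interface Card {
  id: string;
  time: number; // e.g. milliseconds since epoch
}

class TreeNode {
  card: Card;
  left: TreeNode | null = null;
  right: TreeNode | null = null;
  constructor(card: Card) {
    this.card = card;
  }
}

class TimelineIndex {
  private root: TreeNode | null = null;

  insert(card: Card): void {
    const node = new TreeNode(card);
    if (this.root === null) {
      this.root = node;
      return;
    }
    let current: TreeNode = this.root;
    while (true) {
      if (card.time < current.card.time) {
        if (current.left === null) { current.left = node; return; }
        current = current.left;
      } else {
        if (current.right === null) { current.right = node; return; }
        current = current.right;
      }
    }
  }

  // Returns cards with start <= time <= end in ascending time order,
  // skipping subtrees that lie entirely outside the window.
  range(start: number, end: number): Card[] {
    const out: Card[] = [];
    const visit = (node: TreeNode | null): void => {
      if (node === null) return;
      // Left subtree holds strictly smaller times; skip it if they are all < start.
      if (node.card.time > start) visit(node.left);
      if (node.card.time >= start && node.card.time <= end) out.push(node.card);
      // Right subtree holds times >= this one (including duplicates of end).
      if (node.card.time <= end) visit(node.right);
    };
    visit(this.root);
    return out;
  }
}
```

When the user pans or changes time resolution, the view would call `range(windowStart, windowEnd)` and fetch or render only those cards, so the work per query grows with the number of visible cards and the tree's depth rather than with the whole project history.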

The research evaluation compared FZUI against modal windows, drawers, and traditional ZUIs on criteria including efficient use of available real estate, hierarchical support, cross-device applicability, and layout flexibility. FZUI outperformed the other interfaces in every category except ideal content type, since it is optimized for conceptual rather than photographic content. Two user studies provided empirical evidence: a video-based preference assessment on Amazon Mechanical Turk (n=48) and a direct interaction study with UC Irvine students (n=32). The studies revealed that 68.75% preferred FZUI over traditional interfaces. Statistically significant improvements appeared in orientation (p=0.001), smooth animated transitions (p=0.002), cross-platform usability (p<0.001), and overall look and feel (p<0.001); understanding of task context showed only a marginal, non-significant trend (p=0.066).

Participants offered revealing qualitative feedback. "I like [FZUI] the best, because I can see everything in a flow, like a story," one noted. Another observed, "I can see the details, but I can also oversee everything in the environment when I zoomed in with less clicks." A third simply stated, "It feels natural for mobile."

The technical implementation uses HTML5, CSS3, and JavaScript (ES6+), with React for the component architecture. The MERN stack (MongoDB, Express, React, Node) powers the full application without external graphics libraries; it is built from fundamental web primitives. Performance measurements show 60 FPS animations on off-the-shelf hardware such as the MacBook Air M1, efficient DOM management through buffer-based lazy loading, and binary tree data retrieval for scalable performance. The architecture is web-first, with multi-tiered cloud infrastructure, a RESTful API, and responsiveness to viewport changes without media queries.
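Buffer-based lazy loading is commonly implemented as "windowing": only the items inside the visible range, plus a few extra on each side, are mounted in the DOM. The sketch below computes that index range. It assumes equally wide items laid out left to right; the function name `visibleRange` and the `buffer` parameter are hypothetical, and the source does not describe FZUI's exact buffering rule.

```typescript
// Hypothetical windowing helper for buffer-based lazy loading.
// Assumes itemCount items of equal width (itemWidth > 0) laid out left to right.
interface WindowRange {
  first: number; // index of the first item to mount (inclusive)
  last: number;  // index of the last item to mount (inclusive)
}

function visibleRange(
  itemCount: number,
  itemWidth: number,
  scrollLeft: number,
  viewportWidth: number,
  buffer: number, // extra items to keep mounted on each side of the viewport
): WindowRange | null {
  if (itemCount === 0) return null;
  const offset = Math.max(0, scrollLeft);
  // Items that intersect [offset, offset + viewportWidth).
  const firstVisible = Math.floor(offset / itemWidth);
  const lastVisible = Math.ceil((offset + viewportWidth) / itemWidth) - 1;
  // Widen by the buffer, then clamp to valid indices.
  const first = Math.max(0, firstVisible - buffer);
  const last = Math.min(itemCount - 1, lastVisible + buffer);
  return first <= last ? { first, last } : null;
}
```

A React component could recompute this range on scroll or resize and render only `items.slice(first, last + 1)`, positioning each item absolutely at `index * itemWidth`. The buffer keeps items just off-screen already mounted, so a quick pan does not expose blank space before the next render.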

This work contributes several innovations to the field. FZUI is the first dual-scale magnification system for zoomable interfaces, bridging responsive design principles and physics-inspired interfaces. Its temporal visualization framework separates temporal zoom from object magnification. A single interface model provides cross-platform consistency across all device categories. Finally, the research introduces "Fluid Zooming" as a new category within semantic ZUIs, expanding the taxonomy of interface design patterns.

Known constraints exist. The system optimizes for conceptual information like text, charts, and timelines rather than photographic content. Animated transitions may cause discomfort for some users with motion sensitivity. Novel interaction patterns require initial familiarization, presenting a learning curve. Tightly coupled components increase maintenance overhead and development complexity. Future directions include externalizing FZUI as a framework-agnostic toolkit, expanding empirical evaluation to cognitive benefits, conducting longitudinal studies in production environments, and adapting the system for spatial computing in AR and VR contexts.

FZUI demonstrates that Zoomable User Interfaces can thrive in the multi-screen era by embracing responsive design principles. The key insight is that interfaces should behave like liquids, adapting to their containers while keeping their essential properties. This work establishes that physics-inspired interfaces need not be confined to desktop or specialized applications. With dual-scale magnification, we can create consistent, understandable experiences across the full spectrum of computing devices, bringing the promise of ZUIs into everyday use.